Overview

Note: this module requires an internet connection on first use to download the required libraries.

PAMGuard’s deep learning module allows users to deploy a large variety of deep learning models natively in PAMGuard. It is a core module, fully integrated into PAMGuard’s display and data management system, and can be used in real time or for post processing data. It can therefore be used as a classifier for almost any acoustic signal and can integrate into multiple types of acoustic analysis workflows, for example post analysis of recorder data or as part of a real time localisation workflow.

How it works

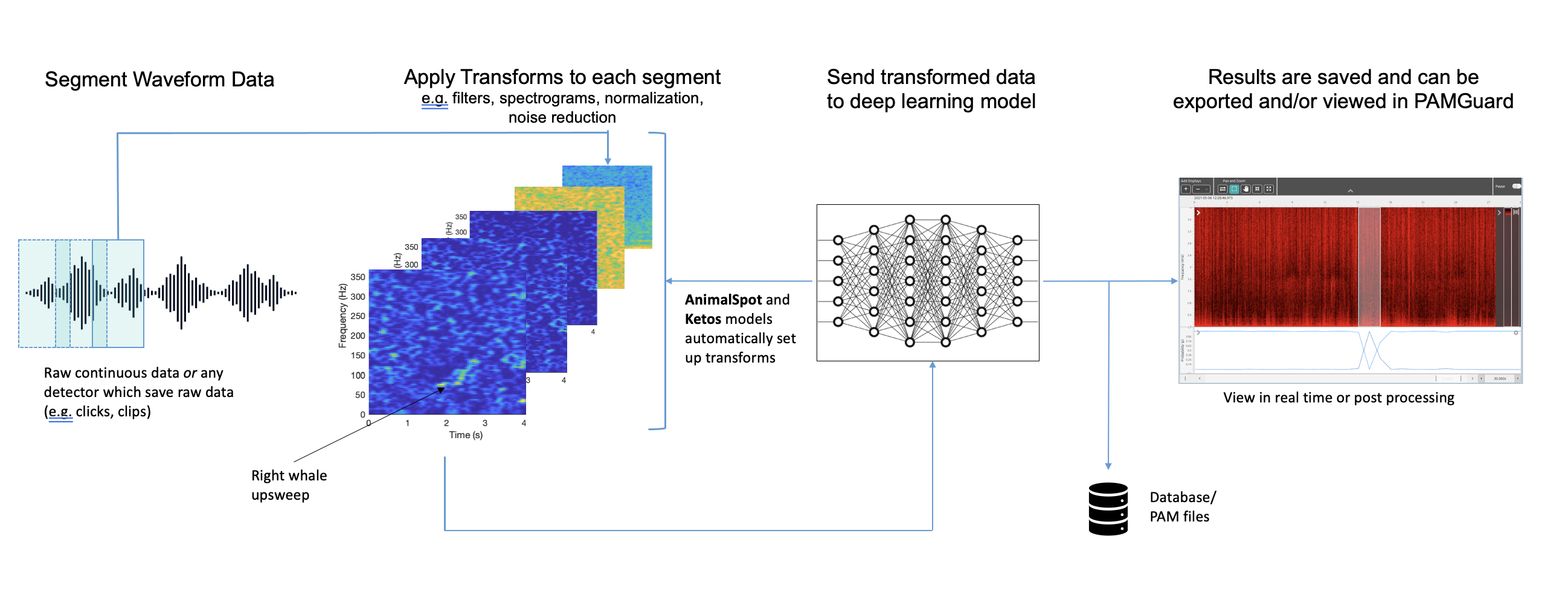

The deep learning module accepts raw data from different types of data sources, e.g. from the Sound Acquisition module, clicks and clips. It segments data into equal sized chunks with a specified overlap. Each chunk is passed through a set of transforms which convert the data into a format accepted by the specified deep learning model. These transforms are either set up manually by the user or, if a specific type of framework has been used to train the deep learning model, set up automatically by PAMGuard. The currently implemented frameworks are described below.

A diagram of how the deep learning module works in PAMGuard. An input waveform is segmented into chunks. A series of transforms are applied to each chunk creating the input for the deep learning model. The transformed chunks are sent to the model. The results from the model are saved and can be viewed in real time (e.g. mitigation) or in post processing (e.g. data from SoundTraps).
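
The segmentation step described above can be illustrated with a minimal Python sketch. This is not PAMGuard's implementation: the function name `segment` and the parameters `chunk_len` and `hop` are hypothetical, and the sketch assumes that a trailing partial chunk is simply dropped, which may differ from what PAMGuard does.

```python
def segment(samples, chunk_len, hop):
    """Split samples into equal sized chunks whose start points are `hop` samples apart.

    The overlap between consecutive chunks is chunk_len - hop samples.
    Assumption for this sketch: a trailing partial chunk is discarded.
    """
    if chunk_len <= 0 or not 0 < hop <= chunk_len:
        raise ValueError("need chunk_len > 0 and 0 < hop <= chunk_len")
    chunks = []
    start = 0
    while start + chunk_len <= len(samples):
        chunks.append(samples[start:start + chunk_len])
        start += hop
    return chunks


# A toy 10-sample "waveform", split into 4-sample chunks with a 2-sample overlap.
waveform = list(range(10))
chunks = segment(waveform, chunk_len=4, hop=2)
```

With these toy values each chunk shares its last two samples with the start of the next chunk, which is the sense of "overlap" used in this section.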

Generic Model

A generic model allows a user to load any model compatible with the DJL (Deep Java Library) library, i.e. PyTorch (JIT), TensorFlow or ONNX models, and then manually set up a series of transforms using PAMGuard’s transform library. It is recommended that users use one of the existing frameworks instead of a generic model where possible, as models from those frameworks automatically generate the required transforms.
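
To make the idea of a manually configured transform chain concrete, here is a small Python sketch. It only illustrates the concept of applying an ordered list of transforms to a chunk; the functions `decimate`, `peak_normalise` and `apply_transforms` are hypothetical names and are not part of PAMGuard's transform library, and the decimation shown omits the anti-alias filter a real implementation would need.

```python
def decimate(samples, factor):
    """Keep every `factor`-th sample (illustration only, no anti-alias filter)."""
    return samples[::factor]


def peak_normalise(samples):
    """Scale samples so the largest absolute value is 1.0 (all-zero input is returned unchanged)."""
    peak = max((abs(s) for s in samples), default=0.0)
    return [s / peak for s in samples] if peak > 0 else list(samples)


def apply_transforms(chunk, transforms):
    """Apply each transform in order, feeding the output of one into the next."""
    for transform in transforms:
        chunk = transform(chunk)
    return chunk


# The order matters: the model must receive data in the same form it was trained on.
transforms = [lambda x: decimate(x, 2), peak_normalise]
chunk = [0.0, 0.5, -2.0, 1.0, 4.0, 0.25]
model_input = apply_transforms(chunk, transforms)
```

The point of the sketch is that the transforms and their order must match whatever preprocessing was used when the model was trained, which is why frameworks that configure this automatically are less error prone.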

AnimalSpot

ANIMAL-SPOT is a deep learning based framework which was initially designed for killer whale sound detection in noise heavy underwater recordings (see Bergler et al. (2019)). It has since been expanded to be a species-independent framework for training acoustic deep learning models using PyTorch and Python. Imported AnimalSpot models will automatically set up their own data transforms and output classes.

Ketos

Ketos is an acoustic deep learning framework based on TensorFlow and developed by MERIDIAN. It has excellent resources and tutorials, and its Python libraries can be installed easily via pip. Imported Ketos (.ktpb) models will automatically set up their own data transforms and output classes.

Koogu

Koogu is a Python package which allows users to train a deep learning model. Koogu helps users by integrating with some frequently used annotation programs and provides tools to train and test classifiers. Imported Koogu models (.kgu) will automatically set up their own data transforms and output classes.

PAMGuardZip

PAMGuard zip models consist of a deep learning model (either a TensorFlow saved_model.pb or PyTorch *.py model) alongside a PAMGuard metadata file (.pdtf*) within a zip archive. The metadata file contains all the information needed for PAMGuard to set up the model. PAMGuard will import the zip file, decompress it, search for the relevant deep learning model and metadata file, and then configure all settings accordingly. This framework allows users to easily share pre-tested PAMGuard compatible models.
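
The import steps above (open the archive, then locate the model and the metadata file) can be sketched in Python as follows. This is only an illustration and not PAMGuard's own import code: the function `find_model_and_metadata` is hypothetical, the demo archive contents are placeholders, and the sketch assumes the metadata file is identified by an extension beginning with `.pdtf` and the model by a `.pb` or `.py` extension.

```python
import io
import os
import zipfile


def find_model_and_metadata(zip_bytes):
    """Return (model_name, metadata_name) for a zip archive; an entry is None if not found."""
    model_name = None
    metadata_name = None
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            extension = os.path.splitext(name)[1].lower()
            if extension.startswith(".pdtf"):
                # Assumption: any extension beginning with ".pdtf" marks the metadata file.
                metadata_name = name
            elif extension in (".pb", ".py"):
                # Assumption: the model is identified purely by its file extension.
                model_name = name
    return model_name, metadata_name


# Build a tiny in-memory archive with placeholder contents for the demonstration.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("saved_model.pb", b"placeholder model bytes")
    archive.writestr("example.pdtf", "placeholder metadata")

model_name, metadata_name = find_model_and_metadata(buffer.getvalue())
```

A real importer would go on to read the metadata file and use it to configure the transforms and output classes; the format of that file is not described here, so the sketch stops at locating the two entries.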